We stand at a fascinating, and slightly terrifying, crossroads. Artificial Intelligence is no longer a far-off sci-fi concept; it’s a silent partner in our daily lives. It recommends our next binge-watch, filters our job applications, helps doctors spot diseases, and even drives our cars. The power is undeniable. The potential is immense.

But with great power comes… well, you know the rest.

As we race to build smarter, faster, and more capable AI, a crucial parallel conversation is finally taking center stage: the conversation about AI ethics. It’s the essential, often-overlooked instruction manual for one of the most powerful tools humanity has ever created.

This isn’t just a topic for philosophers in ivory towers or developers in Silicon Valley. AI ethics affects everyone. So, let’s pull back the curtain and have a plain-English conversation about the moral maze of AI, what the real challenges are, and how we can begin to navigate them.

What Is AI Ethics, Really?

At its core, AI ethics is a field of study and practice focused on ensuring that AI technologies are developed and used in a way that is morally sound, fair, and beneficial to humanity.

It’s about moving beyond what AI can do and focusing on what it should do.

Think of it like this: A brilliant engineer can build a powerful engine. But it takes a different kind of thinking—involving designers, safety experts, and regulators—to decide whether that engine should go into a family minivan, a race car, or a weapon. AI ethics is that “different kind of thinking” for artificial intelligence.

Let’s break down five core ethical pillars that come up again and again in this debate.

Pillar 1: The Bias Problem – “Garbage In, Gospel Out”

This is arguably the most immediate and tangible ethical challenge. A familiar rule of thumb in machine learning is “garbage in, garbage out.” An AI model is only as good, and as fair, as the data it’s trained on. Since AI models are trained on massive datasets created by humans, they tend to absorb our existing societal biases. The added danger is that people often treat a model’s output as objective truth, which is how “garbage in” becomes “gospel out.”

- The Problem: If a hiring AI is trained on 20 years of a company’s hiring and promotion data in which mostly men reached leadership, the AI will learn to associate men with leadership roles. It won’t do this maliciously; it will do it because that’s the pattern it sees. This can lead to AI systems that systematically discriminate against women, people of color, or other underrepresented groups.

- Real-World Example: Amazon scrapped an experimental recruiting AI, as Reuters reported in 2018, after discovering it penalized resumes that included the word “women’s” (as in, “captain of the women’s chess club”) and downgraded graduates of two all-women’s colleges.

- The Ethical Question: How do we prevent AI from inheriting and amplifying the worst of our human biases?

The Path Forward: This involves meticulously curating and cleaning training data, developing algorithms designed to promote fairness, and constantly auditing AI systems for biased outcomes. It’s a continuous process of digital gardening, weeding out the biases as they sprout.
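To make “auditing for biased outcomes” a little more concrete, here is a minimal Python sketch of one common check: comparing how often a model selects candidates from each group. Everything in it is an assumption for illustration. The audit log is made up, the function names are hypothetical, and the 0.8 cutoff is the “four-fifths” rule of thumb from US employment guidelines rather than a universal standard. A real audit would look at many metrics, not just this one.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive decisions for each group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the model recommended the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no gap to measure
    return min(rates.values()) / highest


# Made-up audit log: ten decisions per group (illustrative data only).
audit_log = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(audit_log)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")

# Assumed threshold: the "four-fifths" rule of thumb. Treat it as a
# trigger for human review, not as proof of fairness or unfairness.
if ratio < 0.8:
    print("Flag for review: selection rates differ substantially by group.")
```

A low ratio doesn’t prove discrimination on its own, but it tells you exactly where to start digging.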

Pillar 2: The Black Box Dilemma – Transparency and Explainability

Many of the most powerful AI models, particularly deep learning networks, operate as “black boxes.” We can see the data that goes in and the decision that comes out, but we can’t easily see why the AI made that specific choice.

- The Problem: An AI might deny someone a loan, flag a medical scan for cancer, or recommend a prison sentence. If it can’t explain its reasoning, how can we trust it? How can we appeal its decision? How do we know it didn’t make a mistake based on faulty data?

- Real-World Example: In healthcare, if an AI recommends a specific treatment, a doctor needs to understand its reasoning to verify the recommendation and take responsibility for the patient’s care. A bare answer from a machine, with no reasoning attached, isn’t enough when a life is on the line.

- The Ethical Question: Do we have a right to an explanation from an algorithm that makes a critical decision about our lives?

The Path Forward: The field of Explainable AI (XAI) is dedicated to cracking open these black boxes. Researchers are developing techniques to make AI models more interpretable, so they can highlight the factors that led to a decision. This is crucial for building trust and accountability.
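What does “highlighting the factors that led to a decision” look like in practice? Here is a deliberately tiny Python sketch using a toy linear scoring model, where each factor’s contribution is simply its weight times its value. The feature names, weights, and threshold are all invented for illustration. Deep learning models can’t be unpacked this simply, and real XAI techniques are considerably more involved, but the goal is the same: a decision that comes with its reasons.

```python
# Hypothetical weights for a toy linear loan-scoring model.
# These numbers are invented for illustration, not taken from any real system.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5}
BIAS = 0.5
THRESHOLD = 0.0  # scores at or above this are approved


def explain(applicant):
    """Return the decision, the score, and each factor's contribution.

    Contributions are sorted so the most influential factor (by absolute
    size) comes first, whether it pushed the score up or down.
    """
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    decision = "approve" if score >= THRESHOLD else "deny"
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return decision, score, ranked


# Made-up applicant: income in thousands, debt-to-income ratio, late payments.
applicant = {"income_k": 40, "debt_ratio": 0.6, "late_payments": 3}

decision, score, ranked = explain(applicant)
print(f"decision: {decision} (score {score:.2f})")
for name, contribution in ranked:
    direction = "raised" if contribution > 0 else "lowered"
    print(f"  {name} {direction} the score by {abs(contribution):.2f}")
```

Instead of a bare “deny,” the applicant can see which factors counted against them most, which is the starting point for an appeal.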

Pillar 3: The Privacy Invasion – Is Anything Private Anymore?

AI systems are data-hungry. They thrive on information—our photos, our voice commands, our search histories, our locations. The more they know about us, the better they can perform their tasks.

- The Problem: This creates a massive tension between AI performance and personal privacy. Voice assistants are “always listening” for a wake word. Facial recognition technology is being deployed in public spaces. Your online behavior is tracked and analyzed to create a scarily accurate psychological profile for targeted advertising.

- Real-World Example: The controversy around Clearview AI, a company that scraped billions of photos from social media to create a facial recognition database for law enforcement, highlights the profound privacy implications of large-scale data collection.

- The Ethical Question: How much of our personal data are we willing to trade for convenience and security? Where do we draw the line?

The Path Forward: Solutions include stronger data protection regulations like GDPR, a focus on data minimization (collecting only what is absolutely necessary), and developing on-device AI that can perform tasks without sending your personal data to the cloud (a trend we discussed in our last post on AI news!).
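Data minimization can sound abstract, so here is a small Python sketch of the idea: decide up front which fields a feature actually needs, and drop everything else before anything is stored. The field names and the allow-list are hypothetical, and a real system would pair this with retention limits, access controls, and legal review.

```python
# Hypothetical allow-list: the only fields this feature needs to work.
ALLOWED_FIELDS = {"query", "language"}


def minimize(record, allowed=ALLOWED_FIELDS):
    """Return a copy of `record` containing only the allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed}


# Made-up incoming request carrying more personal data than is needed.
raw_request = {
    "query": "weather tomorrow",
    "language": "en",
    "location": "51.5072,-0.1276",
    "contacts": ["alice@example.com"],
    "device_id": "abc-123",
}

stored = minimize(raw_request)
print(stored)
```

The design choice that matters is the allow-list. Naming what you keep, rather than what you remove, means any new field is dropped by default until someone makes the case for collecting it.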

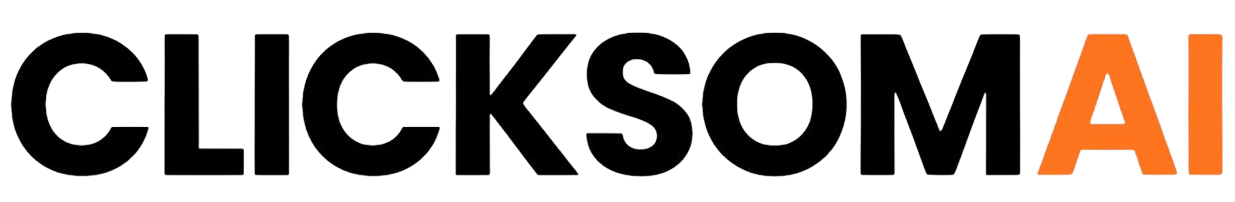

Pillar 4: Accountability – Who’s to Blame When AI Fails?

This is a legal and philosophical minefield. When an autonomous system makes a mistake that causes harm, who is responsible?

- The Problem: Imagine a self-driving car is in a situation where it must choose between hitting a pedestrian or swerving and harming its passenger. Who makes that decision? And if it makes the “wrong” choice, who is at fault? The owner of the car? The manufacturer? The programmer who wrote the code?

- Real-World Example: If an AI-powered surgical robot makes an error during an operation, is the surgeon liable? Is it the hospital? Or the company that built the robot? Our legal systems were not designed for non-human actors.

- The Ethical Question: How do we establish a chain of responsibility for autonomous systems?

The Path Forward: This is one of the most challenging areas. It requires a combination of clear regulation, robust testing and validation standards for high-stakes AI, and new legal frameworks that define liability for autonomous systems. The “blame game” is complex, and we are only just beginning to write the rules.

Pillar 5: The Future of Work and Society – Automation and Equity

The fear that “robots will take our jobs” has been around for decades, but with modern AI, it feels more real than ever. AI can now perform cognitive tasks once thought to be uniquely human—writing, coding, creating art, and analyzing complex data.

- The Problem: While AI will likely create new jobs (like “AI auditors” or “prompt engineers”), it will also displace many others. This could lead to serious economic disruption and exacerbate social inequality if the benefits of AI are concentrated in the hands of a few.

- The Ethical Question: What is our societal responsibility to those whose livelihoods are displaced by AI? How do we ensure the immense wealth generated by AI is distributed equitably?

The Path Forward: This is a societal-level challenge that goes beyond tech companies. It involves massive investment in education and reskilling programs, strengthening social safety nets, and potentially exploring radical ideas like Universal Basic Income (UBI). The goal is to manage the transition so that AI augments human potential rather than simply replacing it.

We Are All AI Ethicists Now

The development of artificial intelligence is too important to be left solely to the developers. Building a future with AI that is fair, just, and beneficial requires a global conversation. It requires us, as citizens, consumers, and professionals, to ask hard questions and demand better answers.

AI ethics isn’t a brake on innovation. It’s the steering wheel and the guardrails. It’s what will ensure that this incredible technology serves all of humanity, not just a select few. The moral compass for AI isn’t something we will find; it’s something we must build, together, one ethical decision at a time.