AI legal issues have become one of the most complex and rapidly evolving areas of law in 2025. Whether you’re a business owner using ChatGPT for marketing, a creative professional generating AI artwork, or an enterprise deploying automated decision-making systems, understanding AI legal issues is no longer optional—it’s essential for protecting yourself and your organization.

I’ve spent the past two years researching AI legal issues, consulting with intellectual property attorneys, and helping businesses navigate this complicated landscape. As someone who uses AI tools daily while also advising clients on responsible implementation, I’ve gained practical insights into the real-world legal risks and how to mitigate them effectively.

The legal landscape surrounding artificial intelligence is evolving faster than most people realize. Courts are issuing landmark rulings on AI copyright, governments worldwide are implementing comprehensive AI regulations, and businesses face new liability exposures that didn’t exist just two years ago.

In this comprehensive guide, I’ll break down the most important AI legal issues you need to understand. We’ll cover copyright and intellectual property, liability and accountability, regulatory compliance, employment law implications, and practical steps to protect yourself legally while still benefiting from AI technology.

Let’s navigate this complex terrain together.


Why Understanding AI Legal Issues Matters Now

Before diving into specific legal areas, let’s establish why AI legal issues demand your immediate attention.

The Legal Landscape Is Shifting Rapidly

Since 2023, courts have begun ruling on a growing number of AI-related cases. The EU AI Act, politically agreed in December 2023, entered into force in August 2024, creating the world’s first comprehensive AI regulatory framework. The U.S. Copyright Office has issued multiple clarifications on AI-generated content. China has implemented AI governance regulations affecting global businesses.

These developments create new AI legal issues that affect virtually everyone using AI technology.

Financial Risks Are Substantial

AI legal issues can result in significant financial consequences:

- Copyright infringement claims reaching millions in damages

- Regulatory fines under the EU AI Act up to €35 million or 7% of global revenue

- Product liability lawsuits for AI-caused harms

- Employment discrimination claims from biased AI systems

- Contract disputes over AI-generated deliverables

Ignorance Is Not a Defense

Many professionals assume that because AI is new, legal frameworks don’t apply or enforcement is lax. This assumption is dangerous. Courts and regulators are actively pursuing AI legal issues, and “I didn’t know” provides no protection.

Understanding AI legal issues now helps you make informed decisions, implement appropriate safeguards, and avoid costly legal problems down the road.

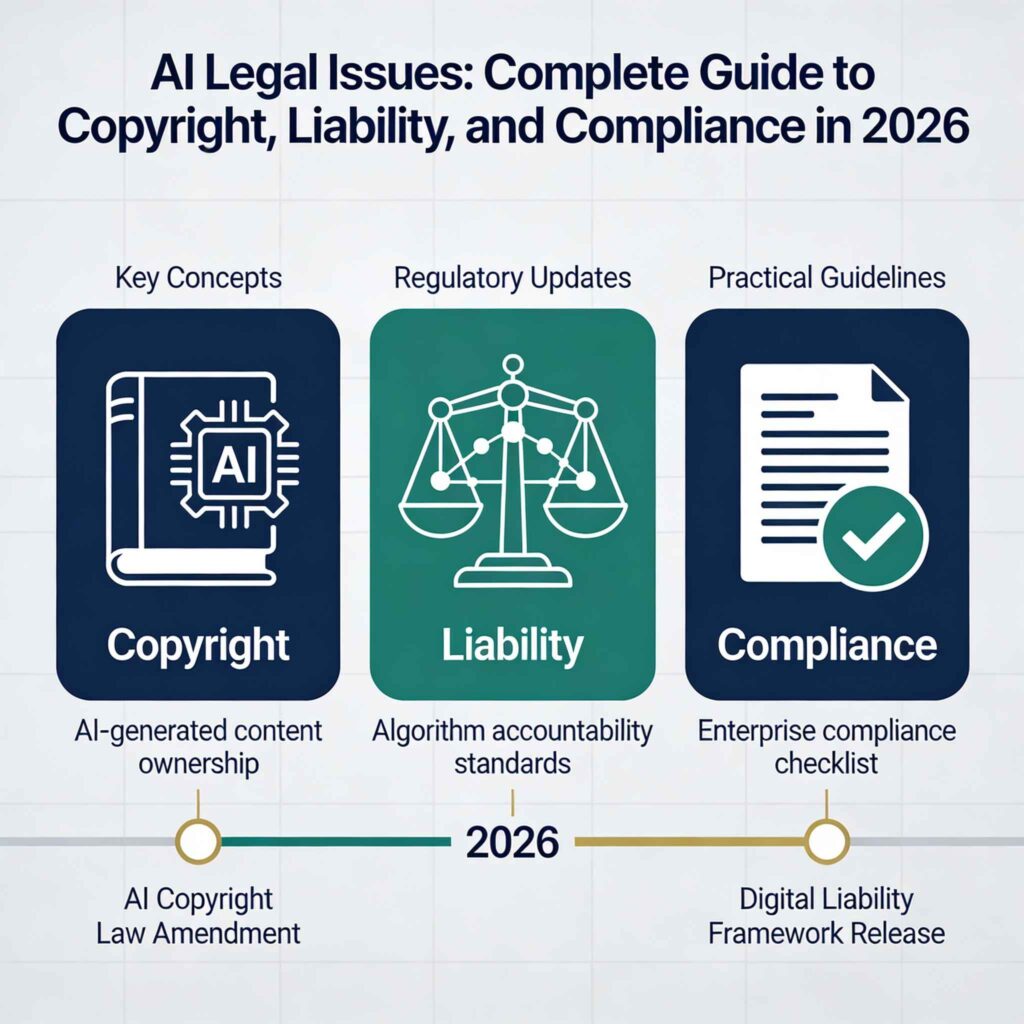

AI Copyright and Intellectual Property: Critical AI Legal Issues

Copyright represents one of the most contentious AI legal issues facing creators, businesses, and AI companies themselves.

Can AI-Generated Content Be Copyrighted?

This question sits at the heart of current AI legal issues debates. The answer varies by jurisdiction but trends toward a clear principle: pure AI-generated content generally cannot be copyrighted.

United States Position:

The U.S. Copyright Office has consistently held that copyright requires human authorship. In the landmark Thaler v. Perlmutter case, a federal district court in 2023 upheld the Office’s refusal to register an artwork that its applicant described as created autonomously by an AI system, without human creative input. The D.C. Circuit affirmed that ruling in March 2025.

However, AI legal issues become more nuanced with human-AI collaboration. If you provide substantial creative direction, make significant modifications, or use AI as a tool within a larger creative process, copyright protection may apply to your contributions.

Practical Implications:

- Under U.S. law, purely AI-generated content is effectively in the public domain from the moment it is created

- Competitors can legally copy your AI-generated marketing materials

- You cannot sue for infringement of purely AI-generated works

- Human creative input changes the equation significantly

My Experience with These AI Legal Issues:

A client came to me after discovering competitors had copied their AI-generated product descriptions verbatim. Unfortunately, because the content was purely AI-generated with minimal human modification, they had no legal recourse. This experience taught me to always add substantial human creativity to AI outputs—both for quality and legal protection.

Copyright Infringement Risks When Using AI

The flip side of the copyright question is whether AI-generated content infringes existing copyrights. This remains legally unsettled but creates real risks.

The Training Data Problem:

AI models learn from vast datasets that include copyrighted works. When AI generates content similar to training data, infringement questions arise. Multiple lawsuits are currently working through courts:

- Getty Images v. Stability AI

- Authors Guild v. OpenAI

- Various artists’ lawsuits against AI image generators

Current Legal Uncertainty:

No appellate court has yet definitively ruled on whether training AI on copyrighted works constitutes fair use or infringement, and early trial-court decisions have not been uniform. This uncertainty creates AI legal issues for everyone in the chain: AI companies, platform providers, and end users.

Protecting Yourself:

Until courts provide clarity on these AI legal issues, take protective measures:

- Use AI tools with clear indemnification provisions

- Avoid prompts requesting specific copyrighted works or close reproductions of them

- Don’t generate content explicitly imitating specific creators

- Run important AI outputs through plagiarism detection

- Maintain records of your prompts and creative process

- Add substantial human modification to AI outputs
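
Keeping records of your prompts and creative process is easier if every generation is logged automatically. The sketch below is a minimal, hypothetical approach, assuming a JSON Lines file is an acceptable record format: it appends the tool name, the prompt, a SHA-256 hash of the output, and a note describing human edits. The function names `log_generation` and `read_log` and the record fields are illustrative, not part of any AI tool’s API, and whether such a log carries evidentiary weight is a question for counsel.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def log_generation(log_path: Path, tool: str, prompt: str, output: str,
                   human_edits: str = "") -> dict:
    """Append one AI-generation record to a JSON Lines file and return it."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "tool": tool,                # hypothetical label for the AI tool used
        "prompt": prompt,
        # Hashing the output lets you later show a file matches what was generated.
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
        "human_edits": human_edits,  # free-text description of human changes
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def read_log(log_path: Path) -> list:
    """Load every record from the JSON Lines log, skipping blank lines."""
    with log_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log = Path(tmp) / "ai_provenance.jsonl"
        log_generation(log, "example-text-tool", "Draft a product description",
                       "Generated draft text", "Rewrote opening and benefits list")
        print(read_log(log)[0]["human_edits"])
```

Logging the human edits alongside the prompt also documents the human creative contribution discussed earlier in this section.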

Intellectual Property in AI-Assisted Inventions

AI legal issues extend beyond copyright into patent law. Can AI-assisted inventions receive patent protection? Who owns inventions that AI helped create?

Current Patent Law Position:

Like copyright, patent law generally requires human inventors. The USPTO and courts have rejected attempts to name AI as an inventor. However, humans who use AI as a tool in the invention process can still receive patents for their innovations.

Employment Considerations:

When employees use AI tools to assist invention, AI legal issues arise regarding ownership:

- Who owns AI-assisted inventions—employee or employer?

- Do existing employment IP agreements cover AI-assisted work?

- How should invention disclosures document AI involvement?

Smart organizations are updating employment agreements and IP policies to address these emerging AI legal issues.

AI Liability and Accountability: Who’s Responsible When AI Causes Harm?

Liability represents another critical category of AI legal issues. When AI systems make mistakes that cause harm, determining responsibility becomes complicated.

The AI Liability Chain

Multiple parties may bear responsibility for AI-caused harms:

AI Developers: Companies creating AI models may face liability for fundamental flaws, biases, or dangerous capabilities in their systems.

Platform Providers: Businesses offering AI tools to users may share liability for harms caused by their platforms.

Deploying Organizations: Companies implementing AI solutions face liability for how they use, configure, and oversee AI systems.

Individual Users: End users may bear responsibility for misusing AI tools or failing to verify AI outputs.

Understanding where you fit in this chain helps you assess your exposure to AI legal issues.

Product Liability for AI Systems

Traditional product liability law applies awkwardly to AI, creating novel AI legal issues:

Manufacturing Defects: These occur when specific units differ from intended design. For AI, this might mean a corrupted model or improper training.

Design Defects: These exist when the fundamental design is unreasonably dangerous. AI systems with known biases or failure modes may qualify.

Warning Defects: Failure to adequately warn users about AI limitations or risks can create liability.

Real-World Cases:

Courts are beginning to address AI product liability:

- Autonomous vehicle accidents have produced settlements and verdicts

- Healthcare AI misdiagnosis cases are emerging

- Financial AI causing consumer harm has triggered enforcement

Professional Liability When Using AI

Professionals face specific AI legal issues when incorporating AI into their practice:

Lawyers: The legal profession has seen multiple cases of attorneys sanctioned for submitting AI-generated briefs containing fabricated case citations. Bar associations are issuing guidance on AI use, and malpractice insurers are adjusting policies.

Healthcare Providers: Medical professionals using AI diagnostic tools must maintain appropriate oversight. Blindly following AI recommendations that harm patients creates malpractice exposure.

Financial Advisors: AI-generated investment advice that causes client losses may trigger fiduciary duty violations.

My Observation on These AI Legal Issues:

The common thread across professions is that AI doesn’t eliminate professional responsibility—it may actually increase it. Professionals must verify AI outputs, maintain expertise to catch errors, and exercise independent judgment. AI is a tool, not a replacement for professional competence.

Contractual Liability Considerations

AI legal issues frequently arise in contractual relationships:

AI-Generated Deliverables:

When you deliver AI-generated work to clients, questions arise:

- Did you disclose AI usage?

- Does AI content meet contractual quality standards?

- Who owns the resulting intellectual property?

- Are you liable if AI content contains errors or infringement?

Best Practices for Contracts:

Address AI legal issues proactively in your agreements:

- Disclose AI usage policies clearly

- Define quality standards explicitly

- Allocate IP rights for AI-assisted work

- Include appropriate limitation of liability clauses

- Require AI disclosure from vendors and contractors

Regulatory Compliance: Navigating AI Legal Issues Across Jurisdictions

Governments worldwide are implementing AI regulations, creating complex compliance obligations and significant AI legal issues for global organizations.

The EU AI Act: Comprehensive AI Regulation

The European Union’s AI Act represents the most comprehensive AI regulatory framework globally. Understanding its requirements is essential for addressing AI legal issues affecting European operations.

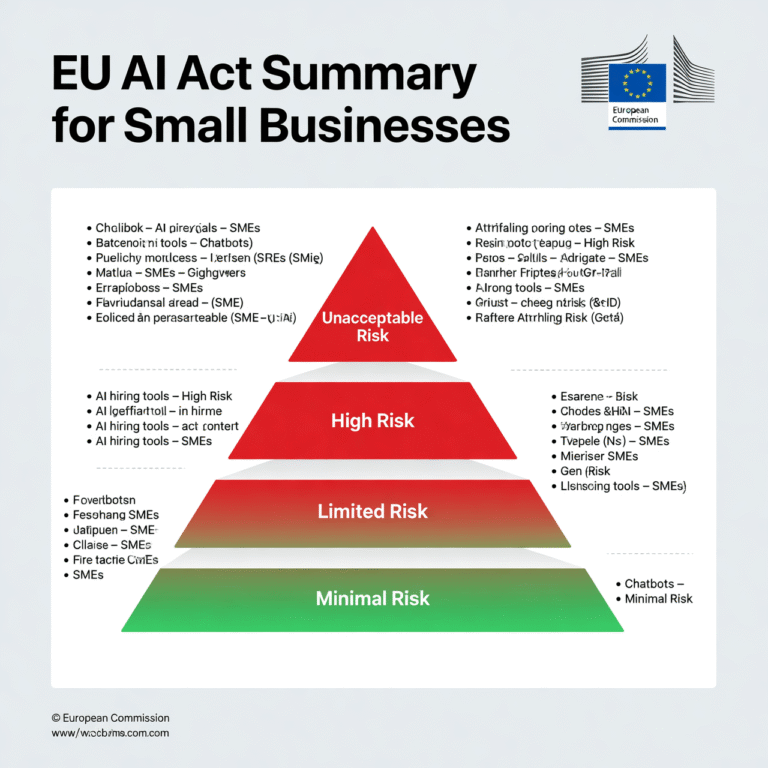

Risk-Based Classification:

The EU AI Act categorizes AI systems by risk level:

Unacceptable Risk (Prohibited):

- Social scoring systems

- Real-time remote biometric identification in public spaces for law enforcement (with narrow exceptions)

- Manipulation techniques exploiting vulnerabilities

- Emotion recognition in workplaces and schools

High-Risk AI Systems:

- Critical infrastructure management

- Educational and vocational training assessment

- Employment and worker management

- Essential services access (credit, insurance)

- Law enforcement applications

- Border control and asylum systems

Limited Risk:

- Chatbots and conversational AI (transparency required)

- Emotion recognition systems (disclosure required)

- Deepfakes and synthetic content (labeling required)

Minimal Risk:

- AI-enabled games

- Spam filters

- Most business applications
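
To see how the tiers relate, here is a deliberately simplified triage helper. It assumes a hypothetical mapping from the example categories listed above to tiers and returns the strictest tier a system touches; any unmapped use returns `None` so it goes to human (and legal) review instead of being guessed. Real classification turns on the Act’s detailed articles and annexes, and obligations can stack (a high-risk system that also converses with users still has transparency duties), so treat this as an illustration only.

```python
from enum import Enum
from typing import List, Optional


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk (transparency duties)"
    MINIMAL = "minimal-risk"


# Hypothetical, simplified mapping of the example categories listed above.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "workplace_emotion_recognition": RiskTier.UNACCEPTABLE,
    "critical_infrastructure": RiskTier.HIGH,
    "education_assessment": RiskTier.HIGH,
    "employment": RiskTier.HIGH,
    "credit_or_insurance_access": RiskTier.HIGH,
    "law_enforcement": RiskTier.HIGH,
    "border_control": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake_generation": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
    "game_ai": RiskTier.MINIMAL,
}

# Most to least restrictive; a lower index means a stricter tier.
SEVERITY_ORDER = [RiskTier.UNACCEPTABLE, RiskTier.HIGH, RiskTier.LIMITED, RiskTier.MINIMAL]


def triage(use_cases: List[str]) -> Optional[RiskTier]:
    """Return the strictest tier among a system's use cases, or None if any
    use case is unmapped (or none are given) and needs human review."""
    if not use_cases:
        return None
    tiers = []
    for case in use_cases:
        tier = EXAMPLE_TIERS.get(case)
        if tier is None:
            return None
        tiers.append(tier)
    return min(tiers, key=SEVERITY_ORDER.index)
```

For example, `triage(["chatbot", "employment"])` returns `RiskTier.HIGH`, because the employment use pulls the whole system into the high-risk tier.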

Compliance Requirements:

High-risk AI systems must meet extensive requirements:

- Risk management systems throughout lifecycle

- Data governance and quality standards

- Technical documentation and record-keeping

- Transparency and information for users

- Human oversight capabilities

- Accuracy, robustness, and cybersecurity standards

Penalties for Non-Compliance:

The EU AI Act creates severe penalties that make these AI legal issues impossible to ignore:

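- Up to €35 million or 7% of worldwide annual turnover, whichever is higher, for prohibited practices

- Up to €15 million or 3% of worldwide annual turnover, whichever is higher, for most other violations

- Up to €7.5 million or 1% of worldwide annual turnover, whichever is higher, for supplying incorrect information to authorities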
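
The “or” in these penalty tiers is easy to misread. For most undertakings the cap is whichever of the two figures is higher, while for SMEs and start-ups the Act applies whichever is lower. Below is a minimal sketch of that arithmetic for the first two tiers, assuming turnover means total worldwide annual turnover for the preceding financial year. `max_fine` is a hypothetical helper that computes a statutory ceiling only, not a prediction of what a regulator would actually impose.

```python
# (fixed cap in EUR, percentage of worldwide annual turnover) per tier.
CEILINGS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
}


def max_fine(violation: str, worldwide_turnover_eur: int, is_sme: bool = False) -> float:
    """Return the statutory ceiling for a violation type.

    Large undertakings: the higher of the fixed cap and the turnover share.
    SMEs and start-ups: the lower of the two.
    """
    fixed_cap, pct = CEILINGS[violation]
    turnover_cap = worldwide_turnover_eur * pct / 100
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


# EUR 2 billion turnover: 7% is EUR 140 million, above the EUR 35 million fixed amount.
print(max_fine("prohibited_practice", 2_000_000_000))
# EUR 100 million turnover: 7% is only EUR 7 million, so the EUR 35 million figure applies.
print(max_fine("prohibited_practice", 100_000_000))
```

The takeaway is that for large companies the percentage, not the headline euro amount, usually sets the ceiling.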

United States AI Regulatory Landscape

The U.S. lacks comprehensive federal AI legislation, but AI legal issues arise from multiple regulatory sources:

Executive Orders:

President Biden’s October 2023 Executive Order on AI (Executive Order 14110), which President Trump revoked in January 2025, established requirements for:

- AI safety and security standards

- Privacy protections in AI systems

- Civil rights and bias prevention

- Consumer protection

- Worker support and labor standards

Sector-Specific Regulations:

Various agencies address AI legal issues within their domains:

- FDA: AI in medical devices

- FTC: AI in consumer protection and advertising

- EEOC: AI in employment decisions

- SEC: AI in financial services

- HUD: AI in housing decisions

State-Level Regulation:

States are implementing their own AI laws:

- Colorado AI Act (comprehensive AI regulation)

- California AI transparency requirements

- Illinois Biometric Information Privacy Act

- New York City automated employment decision law

International AI Regulations

Global organizations face AI legal issues across multiple jurisdictions:

China:

- Algorithmic recommendation regulations

- Deep synthesis (deepfake) regulations

- Generative AI service regulations

Canada:

- Proposed Artificial Intelligence and Data Act (which lapsed when Parliament was prorogued in January 2025)

- Provincial privacy law applications

United Kingdom:

- Principles-based AI regulation approach

- Sector-specific guidance

Brazil:

- AI regulatory framework under development

- Data protection law (LGPD) applications

Practical Compliance Approach:

Given this patchwork of overlapping regulations:

- Map your AI systems and their geographic scope

- Identify applicable regulations for each system

- Implement controls meeting the strictest applicable standard

- Document compliance efforts thoroughly

- Monitor regulatory developments continuously

- Engage legal counsel for complex situations

AI and Employment Law: Workplace AI Legal Issues

AI in the workplace creates distinct AI legal issues affecting hiring, management, and termination decisions.

AI Bias and Discrimination

AI systems can perpetuate or amplify discrimination, creating serious AI legal issues under employment law.

How AI Bias Occurs:

- Training data reflecting historical discrimination

- Proxy variables correlating with protected characteristics

- Underrepresentation of certain groups in training data

- Optimization targets that disadvantage protected groups

Legal Framework:

Title VII, the ADA, ADEA, and state laws prohibit discrimination regardless of whether a human or AI made the decision. Using biased AI doesn’t provide legal protection—employers remain liable for discriminatory outcomes.

Notable Cases:

Several incidents and enforcement actions have highlighted these AI legal issues:

- Amazon scrapped an AI recruiting tool that discriminated against women

- EEOC has initiated investigations of AI hiring tools

- State attorneys general have challenged discriminatory AI systems

AI in Hiring Decisions

AI hiring tools create specific AI legal issues requiring careful management:

Transparency Requirements:

Several jurisdictions now require disclosure of AI in hiring:

- New York City requires notification and bias audits

- Illinois requires consent for AI video interview analysis

- Maryland restricts facial recognition in hiring

Adverse Impact Analysis:

Employers using AI hiring tools should conduct adverse impact analyses to identify discriminatory effects before they create AI legal issues.
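
A common starting point for adverse impact analysis in the U.S. is the “four-fifths rule” from the federal Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is less than 80% of the highest group’s rate is generally regarded as evidence of adverse impact. New York City’s bias audits report a similar “impact ratio.” The sketch below computes those ratios. Group names and counts are hypothetical, and the rule is a rule of thumb rather than a safe harbor, since sample size and statistical significance also matter.

```python
from typing import Dict, List


def impact_ratios(selected: Dict[str, int], applicants: Dict[str, int]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected.get(g, 0) / n for g, n in applicants.items() if n > 0}
    top_rate = max(rates.values())
    if top_rate == 0:
        raise ValueError("no group had any selections; ratios are undefined")
    return {g: rate / top_rate for g, rate in rates.items()}


def four_fifths_flags(selected: Dict[str, int], applicants: Dict[str, int],
                      threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio falls below the four-fifths benchmark."""
    ratios = impact_ratios(selected, applicants)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening results from an AI resume filter.
applicants = {"group_a": 80, "group_b": 40}
advanced = {"group_a": 48, "group_b": 12}    # selection rates: 60% and 30%
print(impact_ratios(advanced, applicants))   # group_b ratio is 0.30 / 0.60 = 0.5
print(four_fifths_flags(advanced, applicants))
```

Running this on each stage of an AI-assisted hiring funnel, not just final offers, helps locate where a disparity is introduced.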

Vendor Due Diligence:

When purchasing AI hiring tools, investigate:

- Bias testing methodologies

- Validation studies

- Adverse impact data

- Compliance certifications

- Indemnification provisions

AI Monitoring of Employees

Workplace AI surveillance creates additional AI legal issues:

Privacy Concerns:

AI-powered monitoring of employees raises privacy issues:

- Keystroke logging and productivity tracking

- Video surveillance with facial recognition

- Email and communication analysis

- Location tracking

Legal Limitations:

Various laws restrict workplace monitoring:

- ECPA limits electronic communication interception

- State laws may require monitoring disclosure

- GDPR restricts employee monitoring in Europe

- The NLRA protects employees’ concerted activity, including discussions about working conditions

Best Practices:

Address the legal risks of AI workplace monitoring by:

- Disclosing monitoring practices clearly

- Limiting monitoring to legitimate business needs

- Avoiding monitoring of protected activities

- Complying with applicable privacy laws

- Consulting counsel before implementing monitoring

AI and Worker Displacement

As AI automates tasks previously performed by humans, additional AI legal issues emerge:

WARN Act Considerations:

Large-scale AI-driven workforce reductions may trigger WARN Act notice requirements for mass layoffs.
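
The federal WARN Act thresholds are arithmetic, so a rough screen can be coded. The sketch below assumes the federal rules: employers with 100 or more full-time employees must generally give 60 days’ notice of a “mass layoff,” meaning job losses at a single site within 30 days of at least 500 full-time employees, or of 50 to 499 if they make up at least 33% of the site’s full-time workforce. It ignores plant closings, the alternative headcount test based on hours worked, 90-day aggregation, statutory exceptions, and state “mini-WARN” laws, which can be stricter. `needs_warn_notice` is a hypothetical helper, not a compliance determination.

```python
def is_mass_layoff(full_time_losses: int, full_time_at_site: int) -> bool:
    """Simplified federal WARN mass-layoff test for one site over a 30-day window."""
    if full_time_losses >= 500:
        return True
    # Integer arithmetic avoids floating-point edge cases at exactly 33%.
    return full_time_losses >= 50 and full_time_losses * 100 >= 33 * full_time_at_site


def needs_warn_notice(employer_full_time: int, full_time_losses: int,
                      full_time_at_site: int) -> bool:
    """True if this simplified screen suggests 60-day federal WARN notice."""
    covered_employer = employer_full_time >= 100
    return covered_employer and is_mass_layoff(full_time_losses, full_time_at_site)


# Hypothetical: automating a 150-person support center and cutting 60 roles.
print(needs_warn_notice(employer_full_time=1_200, full_time_losses=60, full_time_at_site=150))
```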

Reasonable Accommodation:

Replacing human processes with AI does not remove disability accommodation obligations. If an AI tool cannot accommodate a worker or applicant with a disability, the employer may need to provide an alternative.

Collective Bargaining:

Unionized workplaces may have contractual restrictions on AI implementation affecting bargaining unit work.

AI Disclosure and Transparency Requirements

Transparency obligations create important AI legal issues for content creators, businesses, and platforms.

Content Disclosure Requirements

Various jurisdictions require disclosure when content is AI-generated:

Political Content:

Several states require disclosure of AI-generated political advertising. Federal legislation is pending. Creating deepfakes of political figures without disclosure may violate election laws.

Commercial Content:

FTC guidelines suggest that AI-generated endorsements or reviews may require disclosure. Undisclosed AI content could constitute deceptive practices.

Synthetic Media:

The EU AI Act requires providers of generative AI systems to mark synthetic outputs in a machine-readable format and requires deployers to disclose deepfakes. Platforms face obligations to detect and label AI-generated media.
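
In practice, machine-readable labeling usually means attaching provenance metadata or a watermark to generated files, and industry standards such as C2PA content credentials exist for this. As a much simpler illustration of the idea, the sketch below writes a JSON “sidecar” file declaring a file AI-generated and checks for it. The sidecar naming and field names are a hypothetical in-house convention, not a standard, and a sidecar is easily separated from the media it describes, which is why embedded metadata or watermarks are generally preferred.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def _sidecar_for(media_path: Path) -> Path:
    # e.g. banner.png -> banner.png.ai-label.json (hypothetical naming convention)
    return media_path.with_name(media_path.name + ".ai-label.json")


def write_ai_label(media_path: Path, generator: str) -> Path:
    """Write a sidecar JSON file declaring `media_path` as AI-generated."""
    label = {
        "file": media_path.name,
        "ai_generated": True,
        "generator": generator,  # which tool or model produced the file
        "labelled_at": datetime.now(timezone.utc).isoformat(),
    }
    sidecar = _sidecar_for(media_path)
    sidecar.write_text(json.dumps(label, indent=2), encoding="utf-8")
    return sidecar


def is_labelled_ai(media_path: Path) -> bool:
    """True only if a sidecar exists and explicitly marks the file as AI-generated."""
    sidecar = _sidecar_for(media_path)
    if not sidecar.exists():
        return False
    return json.loads(sidecar.read_text(encoding="utf-8")).get("ai_generated") is True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        image = Path(tmp) / "banner.png"
        image.write_bytes(b"placeholder image bytes")
        write_ai_label(image, "example-image-model")
        print(is_labelled_ai(image))
```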

Platform Transparency Obligations

Platforms deploying AI systems face transparency obligations:

Algorithmic Transparency:

Some jurisdictions require platforms to explain how AI algorithms work:

- The EU Digital Services Act requires online platforms to explain the main parameters of their recommender systems

- Proposed U.S. legislation would mandate algorithmic accountability

- Platform terms of service may create disclosure obligations

User Notification:

When users interact with AI rather than humans, disclosure may be required:

- California bot disclosure law requires notification

- EU AI Act requires transparency for conversational AI

- Industry standards increasingly expect disclosure
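
One straightforward way to meet these expectations is to build the disclosure into the conversation layer rather than relying on page copy. Below is a minimal sketch, assuming your chatbot exposes a reply function that takes the user’s message and returns text: the wrapper prepends a disclosure to the first reply of each session and repeats it when a user asks whether they are talking to a human. The class name, disclosure wording, and trigger phrases are hypothetical. Neither the California law nor the EU AI Act prescribes this implementation, so confirm the required wording and timing with counsel.

```python
from typing import Callable

# Hypothetical wording; confirm what your jurisdiction actually requires.
DISCLOSURE = "You are chatting with an automated AI assistant, not a human."

# Simple, illustrative phrases that trigger a repeat of the disclosure.
HUMAN_QUESTIONS = ("are you a human", "are you human", "are you a bot",
                   "is this a bot", "am i talking to a person")


class DisclosingChatSession:
    """Wraps a reply function so users are told they are talking to AI."""

    def __init__(self, reply_fn: Callable[[str], str]) -> None:
        self._reply_fn = reply_fn
        self._disclosed = False

    def respond(self, user_message: str) -> str:
        reply = self._reply_fn(user_message)
        asked = any(q in user_message.lower() for q in HUMAN_QUESTIONS)
        if not self._disclosed or asked:
            self._disclosed = True
            return f"{DISCLOSURE}\n\n{reply}"
        return reply


session = DisclosingChatSession(lambda msg: f"Echo: {msg}")
print(session.respond("Hello"))           # disclosure followed by the reply
print(session.respond("Track my order"))  # reply only
```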

Corporate Disclosure of AI Use

Public companies face AI legal issues in their disclosure obligations:

SEC Requirements:

Material AI risks may require disclosure:

- AI-related business risks in risk factors

- AI investments in MD&A discussions

- AI incidents affecting operations

Board Oversight:

Boards increasingly face scrutiny of AI governance. Failure to oversee AI risks adequately could create director liability.

Data Privacy and AI: Interconnected AI Legal Issues

AI systems depend on data, creating significant AI legal issues at the intersection of artificial intelligence and privacy law.

GDPR and AI Compliance

The General Data Protection Regulation creates extensive AI legal issues for organizations processing European data:

Lawful Basis for AI Processing:

AI systems must have lawful bases for processing personal data:

- Consent (difficult for complex AI systems)

- Contractual necessity

- Legal obligation

- Vital interests

- Public interest

- Legitimate interests (requires balancing test)

Automated Decision-Making Rights:

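GDPR Article 22, together with the transparency rights in Articles 13 to 15, gives individuals rights regarding automated decisions:

- Right not to be subject to decisions based solely on automated processing that produce legal or similarly significant effects

- Right to obtain human intervention, express their point of view, and contest such decisions

- Right to meaningful information about the logic involved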
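
For systems that make significant decisions, one way to build in the human intervention safeguard is to hold such decisions in a review queue instead of releasing them automatically. The sketch below takes that conservative approach for every decision flagged as having a legal or similarly significant effect, even though Article 22 permits solely automated decisions in some cases (for example, with explicit consent or where necessary for a contract) provided safeguards apply. The `Decision` and `ReviewQueue` types are hypothetical, and regulators expect human review to be meaningful rather than a rubber stamp, which no code can guarantee on its own.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Decision:
    subject_id: str
    outcome: str                 # e.g. "approve" or "decline"
    significant_effect: bool     # legal or similarly significant effect on the person?
    automated_only: bool = True  # no human has meaningfully reviewed it yet
    reasons: List[str] = field(default_factory=list)  # plain-language main factors


@dataclass
class ReviewQueue:
    pending: List[Decision] = field(default_factory=list)

    def finalize(self, decision: Decision) -> str:
        """Release a decision, or hold it if it is significant and solely automated."""
        if decision.significant_effect and decision.automated_only:
            self.pending.append(decision)
            return "held_for_human_review"
        return "released"

    def human_review(self, subject_id: str, reviewer: str, outcome: str) -> Decision:
        """Record a human reviewer's outcome and remove the decision from the queue."""
        for i, decision in enumerate(self.pending):
            if decision.subject_id == subject_id:
                reviewed = self.pending.pop(i)
                reviewed.outcome = outcome
                reviewed.automated_only = False
                reviewed.reasons.append(f"reviewed by {reviewer}")
                return reviewed
        raise KeyError(f"no pending decision for {subject_id}")
```

Keeping plain-language `reasons` on each decision also gives you material for explaining the logic involved when an individual asks.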

Data Minimization:

AI systems must process only the personal data necessary for their stated purpose, a principle that sits in tension with AI’s data-hungry nature.
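
A basic engineering control that supports data minimization is an allowlist applied before personal data reaches an AI system: each processing purpose is tied to the fields it genuinely needs, and everything else is dropped. The purposes and field names below are hypothetical, and minimization also covers what you collect and how long you keep it, which a filter like this does not address.

```python
from typing import Any, Dict, Set

# Hypothetical purpose-to-fields mapping; in practice this would come from your
# documented purpose specification or data protection impact assessment.
ALLOWED_FIELDS: Dict[str, Set[str]] = {
    "credit_scoring": {"income", "existing_debt", "payment_history"},
    "support_chatbot": {"order_id", "issue_description"},
}


def minimize(record: Dict[str, Any], purpose: str) -> Dict[str, Any]:
    """Keep only fields approved for `purpose`; an unknown purpose gets no data."""
    allowed = ALLOWED_FIELDS.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}


applicant = {"income": 52_000, "existing_debt": 4_000,
             "religion": "not relevant", "email": "a@example.com"}
print(minimize(applicant, "credit_scoring"))
```

Defaulting unknown purposes to an empty set means a new use must be approved before it receives any data, rather than everything passing through by default.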

U.S. State Privacy Laws and AI

State privacy laws create additional AI legal issues:

California (CCPA/CPRA):

- Right to opt out of automated decision-making

- Right to information about automated processing

- Required risk assessments for certain AI uses

Virginia, Colorado, Connecticut:

- Similar automated decision-making provisions

- Opt-out rights for profiling

- Assessment requirements

Children’s Privacy and AI

AI systems interacting with children face heightened AI legal issues:

COPPA Requirements:

- Parental consent for data collection from children under 13

- Restrictions on behavioral advertising to children

- Data minimization requirements

Emerging Regulations:

- Age-appropriate design requirements

- Restrictions on recommendation algorithms for minors

- Enhanced protections for children’s data in AI training

Practical Steps to Manage AI Legal Issues in Your Organization

Understanding AI legal issues is essential, but implementing practical protections is equally important.

Develop an AI Governance Framework

Create structured oversight for AI legal issues:

AI Inventory:

Document all AI systems your organization uses or develops:

- System purpose and functionality

- Data inputs and outputs

- Decision-making impact

- Regulatory classification
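
An AI inventory can start as a simple structured record per system. The sketch below models the four attributes listed above as a dataclass, adds an accountable owner, exports the inventory to CSV for sharing with legal or compliance teams, and flags entries whose regulatory classification has not yet been assessed. The field names, the `"unassessed"` and `"unassigned"` conventions, and the sample systems are hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List


@dataclass
class AISystemRecord:
    name: str
    purpose: str
    data_inputs: List[str]
    outputs: List[str]
    decision_impact: str            # e.g. "informs human decision" or "decides automatically"
    regulatory_classification: str  # e.g. "EU AI Act: high-risk", or "unassessed"
    owner: str = "unassigned"


def needs_review(records: List[AISystemRecord]) -> List[str]:
    """Names of systems with no classification or no accountable owner."""
    return [r.name for r in records
            if r.regulatory_classification == "unassessed" or r.owner == "unassigned"]


def to_csv(records: List[AISystemRecord]) -> str:
    """Export the inventory as CSV, joining list fields with semicolons."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(AISystemRecord)])
    writer.writeheader()
    for record in records:
        row = asdict(record)
        row["data_inputs"] = "; ".join(record.data_inputs)
        row["outputs"] = "; ".join(record.outputs)
        writer.writerow(row)
    return buf.getvalue()


inventory = [
    AISystemRecord("resume-screener", "Rank job applicants", ["resumes"], ["ranked shortlist"],
                   "informs human decision", "EU AI Act: high-risk (employment)", "HR"),
    AISystemRecord("support-bot", "Answer customer questions", ["chat messages"], ["replies"],
                   "decides automatically", "unassessed"),
]
print(needs_review(inventory))
print(to_csv(inventory))
```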

Risk Assessment:

Evaluate AI legal issues for each system:

- Copyright and IP risks

- Liability exposure

- Regulatory compliance requirements

- Privacy implications

Policies and Procedures:

Establish clear guidance:

- Approved AI tools and uses

- Prohibited applications

- Review and approval processes

- Incident response procedures

Implement Contractual Protections

Address AI legal issues in your agreements:

Vendor Contracts:

When purchasing AI tools, negotiate:

- Indemnification for infringement claims

- Compliance representations and warranties

- Data processing agreements

- Audit rights

- Liability allocation

Customer Contracts:

When delivering AI-assisted work:

- Disclose AI usage appropriately

- Define quality standards

- Allocate IP rights clearly

- Limit liability appropriately

Employment Agreements:

Update employee documentation:

- AI usage policies

- IP assignment for AI-assisted work

- Confidentiality regarding AI capabilities

Train Your Team

Ensure staff understand AI legal issues:

General Awareness:

All employees using AI should understand:

- Approved tools and uses

- Disclosure requirements

- Quality verification obligations

- Escalation procedures for concerns

Role-Specific Training:

Provide specialized training for:

- Legal and compliance teams

- HR professionals using AI

- Technical staff developing AI

- Customer-facing employees

Monitor Legal Developments

AI legal issues evolve rapidly. Stay current through:

- Industry association updates

- Legal counsel briefings

- Regulatory agency guidance

- Professional development programs

- Peer network discussions

Frequently Asked Questions About AI Legal Issues

Can I be sued for content AI generates for me?

Yes. You can face liability for AI-generated content in multiple ways. If AI output infringes copyrights, you may be liable as the publisher. If AI content defames someone, you may face defamation claims. If AI-generated professional advice causes harm, professional liability may apply. Always review AI outputs before publishing or using them professionally.

Do I need to disclose when I use AI?

Disclosure requirements vary by context and jurisdiction. Some situations legally require disclosure (political ads in some states, chatbot interactions in California). Other situations make disclosure ethically advisable even without legal mandates. Professional contexts often require disclosure to clients. When uncertain, disclosure is generally the safer approach.

Who owns content I create using AI tools?

Ownership of AI-assisted content involves complex AI legal issues. Purely AI-generated content likely has no copyright protection. Content where you provide substantial creative input may be protectable to the extent of your human contribution. AI tool terms of service may affect ownership rights. Consult an attorney for specific situations.

Are AI companies liable if their tools cause harm?

AI company liability remains legally unsettled. Product liability theories may apply to AI systems. Terms of service typically attempt to limit AI company liability. Indemnification provisions vary between AI providers. The legal landscape is evolving through ongoing litigation. Evaluate liability allocation carefully before relying on AI tools for consequential decisions.

How do I comply with the EU AI Act?

EU AI Act compliance depends on your AI system’s risk classification. Minimal-risk systems have few requirements. Limited-risk systems require transparency measures. High-risk systems face extensive obligations including risk management, documentation, human oversight, and accuracy requirements. Conduct a thorough classification analysis and implement required controls. Consider engaging specialized legal counsel for high-risk applications.

Can employees use AI tools without company permission?

This creates significant AI legal issues for employers. Unauthorized AI use may expose company data, create liability risks, and violate security policies. Organizations should establish clear AI usage policies, provide approved tools for legitimate needs, and train employees on requirements. Shadow AI usage is a growing concern requiring proactive management.

Final Thoughts on AI Legal Issues

AI legal issues will only grow more complex and consequential in coming years. The organizations and individuals who invest in understanding these issues now will be better positioned to harness AI’s benefits while managing its risks.

Throughout my work in this space, I’ve observed that proactive attention to AI legal issues pays dividends. Organizations with clear AI governance avoid costly disputes. Professionals who understand legal boundaries make better decisions. Creators who implement appropriate protections maintain valuable rights.

The legal framework for AI remains incomplete and evolving. Courts are still working through fundamental questions about copyright, liability, and rights. Regulators are implementing new compliance requirements. Legislators continue proposing new AI laws.

This uncertainty shouldn’t paralyze you—but it should motivate careful attention. Monitor developments affecting your AI usage. Implement reasonable protections based on current understanding. Build relationships with legal counsel who understand AI issues. Document your decision-making processes.

AI technology offers remarkable capabilities that can enhance virtually every professional endeavor. Understanding AI legal issues allows you to capture those benefits while protecting yourself from the risks that accompany this powerful technology.

Stay informed. Stay compliant. Stay protected.